AdaBoost algorithm

AdaBoost, short for Adaptive Boosting, is an ensemble learning algorithm that combines the predictions of multiple weak classifiers into a single strong classifier. It trains the weak classifiers sequentially on the same training set, with a weight attached to each sample. After each round, the weights of misclassified samples are increased and the weights of correctly classified samples are decreased, so the next weak classifier concentrates on the examples the ensemble still gets wrong. Each weak classifier also receives its own weight in the final vote, based on its weighted error: more accurate classifiers count for more. The final prediction is the weighted vote of all weak classifiers, which can generalize well to unseen data even when each individual classifier is only slightly better than random guessing.
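The reweighting loop described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the implementation used in this project: it assumes binary labels in {-1, +1} and uses single-threshold decision stumps as weak classifiers. The function names (`fit_stump`, `adaboost_fit`, `adaboost_predict`) and the toy data are hypothetical.

```python
import math

def fit_stump(X, y, w):
    """Exhaustively search for the stump (error, feature, threshold, polarity)
    with the lowest weighted error under sample weights w."""
    best = None
    for j in range(len(X[0])):
        for thr in sorted({row[j] for row in X}):
            for polarity in (1, -1):
                preds = [polarity if row[j] <= thr else -polarity for row in X]
                err = sum(wi for wi, p, yi in zip(w, preds, y) if p != yi)
                if best is None or err < best[0]:
                    best = (err, j, thr, polarity)
    return best

def stump_predict(stump, row):
    _, j, thr, polarity = stump
    return polarity if row[j] <= thr else -polarity

def adaboost_fit(X, y, n_rounds=3):
    n = len(X)
    w = [1.0 / n] * n                      # start with uniform sample weights
    ensemble = []
    for _ in range(n_rounds):
        stump = fit_stump(X, y, w)
        err = min(max(stump[0], 1e-10), 1 - 1e-10)   # avoid log(0) and division by zero
        alpha = 0.5 * math.log((1 - err) / err)      # vote weight of this stump
        ensemble.append((alpha, stump))
        # Increase weights of misclassified samples, decrease the rest.
        w = [wi * math.exp(-alpha * yi * stump_predict(stump, row))
             for wi, yi, row in zip(w, y, X)]
        total = sum(w)
        w = [wi / total for wi in w]       # renormalize so the weights sum to 1
    return ensemble

def adaboost_predict(ensemble, row):
    score = sum(alpha * stump_predict(stump, row) for alpha, stump in ensemble)
    return 1 if score >= 0 else -1

# Toy 1-D data (hypothetical): no single threshold separates the two classes.
X = [[1], [2], [3], [4], [5], [6]]
y = [1, 1, -1, -1, 1, 1]

ensemble = adaboost_fit(X, y, n_rounds=3)
predictions = [adaboost_predict(ensemble, row) for row in X]
print(predictions)
```

On this toy set, the best single stump misclassifies two of the six points, while the weighted vote of three stumps classifies all six correctly.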

Our Implementation

In this project, we used AdaBoost to tackle a classification problem. The base learners are decision trees with a maximum depth of 10 (considerably deeper than the depth-1 stumps often used as weak learners). To optimize the model's performance, we used GridSearchCV to tune two hyperparameters: the number of estimators and the learning rate. We ran 5-fold cross-validation over the combinations in the grid and selected the best-performing AdaBoost classifier.

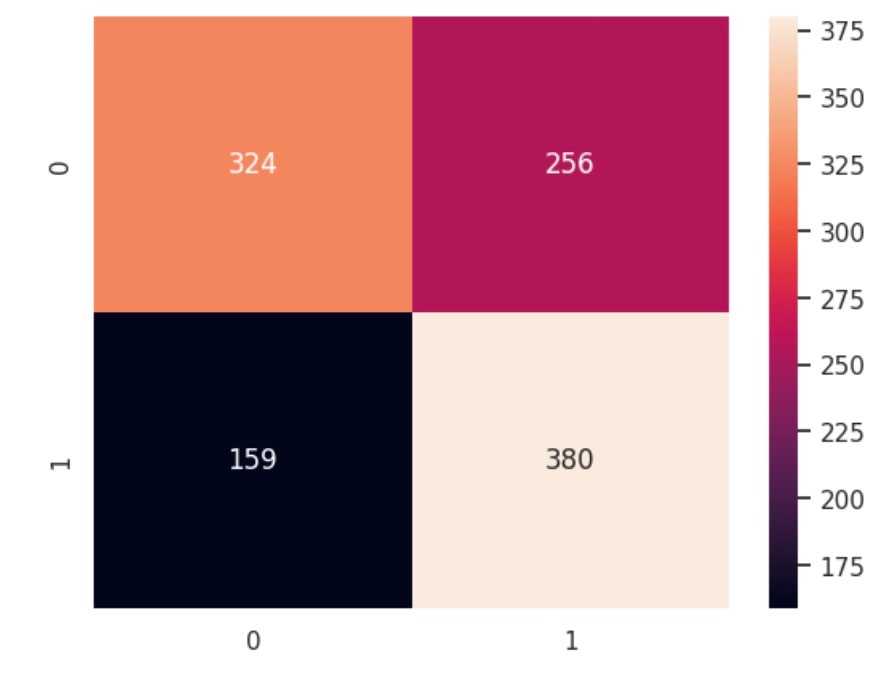
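A setup like the one described above might look as follows with scikit-learn. This is a sketch, not a copy of the project's code: the synthetic data from `make_classification`, the grid values, the train/test split ratio, and the `random_state` values are all assumptions chosen for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import AdaBoostClassifier
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.tree import DecisionTreeClassifier

# Placeholder data (assumption): substitute the project's own features and labels.
X, y = make_classification(n_samples=300, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0
)

# Base learner: decision tree with max_depth=10. It is passed positionally
# because the keyword name for this argument differs between scikit-learn versions.
model = AdaBoostClassifier(DecisionTreeClassifier(max_depth=10), random_state=0)

# Hypothetical grid values for the two tuned hyperparameters.
param_grid = {"n_estimators": [25, 50], "learning_rate": [0.5, 1.0]}

# 5-fold cross-validation over every combination in the grid.
search = GridSearchCV(model, param_grid, cv=5, scoring="accuracy")
search.fit(X_train, y_train)

best_model = search.best_estimator_   # refit on the full training split
print(search.best_params_)
print(best_model.score(X_test, y_test))
```

Keeping the test split out of `GridSearchCV` means the reported test accuracy is not influenced by the hyperparameter search.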

After training the model on our dataset, we evaluated it on a held-out test set using three tools: accuracy, the confusion matrix, and the classification report. The accuracy score gives the overall fraction of correct predictions. The confusion matrix breaks the predictions down into true positives, true negatives, false positives, and false negatives. The classification report lists precision, recall, and F1-score for each class, which lets us assess the classifier's performance on individual classes. These metrics give us a basis for judging whether the model is suitable for real-world classification tasks such as image recognition, spam filtering, or medical diagnosis.
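The three evaluation tools mentioned above are available in `sklearn.metrics`. The sketch below uses small hand-written label lists as stand-ins for the real test labels and model predictions; `y_true` and `y_pred` are hypothetical values, not results from this project.

```python
from sklearn.metrics import accuracy_score, classification_report, confusion_matrix

# Hypothetical labels and predictions, standing in for the test labels
# and the output of the tuned classifier's predict() on the test set.
y_true = [0, 0, 0, 1, 1, 1, 1, 0]
y_pred = [0, 0, 1, 1, 1, 0, 1, 0]

acc = accuracy_score(y_true, y_pred)             # fraction of correct predictions
cm = confusion_matrix(y_true, y_pred)            # rows: true class, columns: predicted class
report = classification_report(y_true, y_pred)   # per-class precision, recall, F1

print(acc)
print(cm)
print(report)
```

For binary labels 0 and 1, scikit-learn lays the confusion matrix out as `[[TN, FP], [FN, TP]]`, so the off-diagonal entries are the two kinds of error.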

Confusion matrix: